AI Writing Detection Capabilities

Frequently Asked Questions

How do Turnitin’s AI writing detection capabilities work?

1. Does Turnitin offer a solution to detect AI writing?

Yes. Turnitin has released its AI writing detection capabilities to help educators uphold academic integrity while ensuring that students are treated fairly.

We have added an AI writing indicator to the Similarity Report. It shows an overall percentage of the document that AI writing tools, such as ChatGPT, may have generated. The indicator further links to a report which highlights the text segments that our model predicts were written by AI. Please note, only instructors and administrators are able to see the indicator.

While Turnitin has confidence in its model, Turnitin does not make a determination of misconduct; rather, it provides data for educators to make an informed decision based on their academic and institutional policies. Hence, we must emphasize that the percentage on the AI writing indicator should not be used as the sole basis for action or as a definitive grading measure by instructors.

2. How does it work?

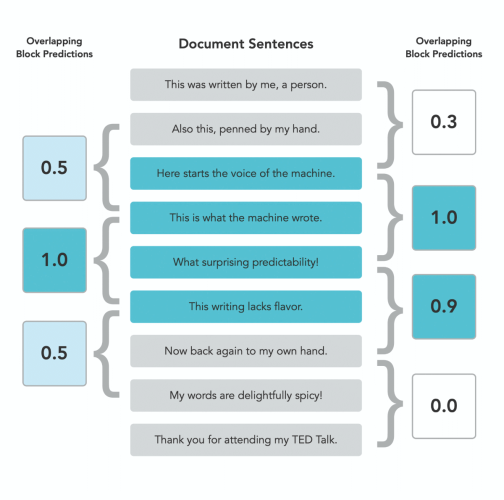

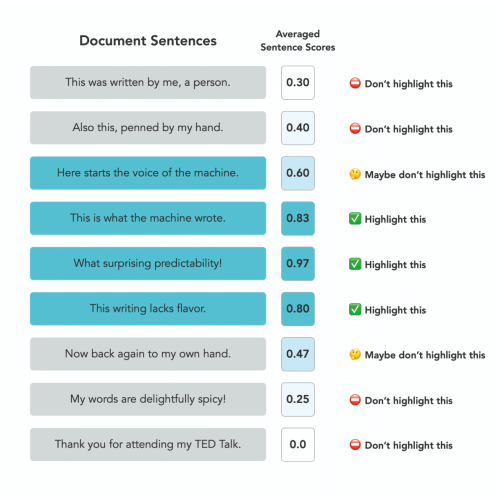

When a paper is submitted to Turnitin, the submission is first broken into segments of text that are roughly a few hundred words (about five to ten sentences). Those segments are then overlapped with each other to capture each sentence in context.

The segments are run against our AI detection model and we give each sentence a score between 0 and 1 to determine whether it is written by a human or by AI. If our model determines that a sentence was not generated by AI, it will receive a score of 0. If it determines the entirety of the sentence was generated by AI it will receive a score of 1.

Using the average scores of all the segments within the document, the model then generates an overall prediction of how much text in the submission we believe has been generated by AI.
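The segment, score, and average steps above can be sketched as follows. This is a minimal illustration, not Turnitin's implementation: the window size, the stride, the function names, and `toy_scorer` (a stand-in for a trained classifier) are all assumptions, and the real model's aggregation is not described in detail here.

```python
from statistics import mean


def overlapping_segments(sentences, size=5, stride=2):
    """Return (start_index, sentences) windows of `size` sentences that overlap."""
    if not 0 < stride <= size:
        raise ValueError("stride must be between 1 and size so no sentence is skipped")
    if len(sentences) <= size:
        return [(0, list(sentences))]
    starts = list(range(0, len(sentences) - size + 1, stride))
    if starts[-1] != len(sentences) - size:
        starts.append(len(sentences) - size)  # extra window so the tail is covered
    return [(s, sentences[s:s + size]) for s in starts]


def sentence_scores(sentences, score_segment, size=5, stride=2):
    """Give each sentence the mean score (0..1) of every segment containing it."""
    if not sentences:
        return []
    collected = [[] for _ in sentences]
    for start, segment in overlapping_segments(sentences, size, stride):
        score = score_segment(segment)
        for offset in range(len(segment)):
            collected[start + offset].append(score)
    return [mean(scores) for scores in collected]


def overall_fraction(scores):
    """Average the per-sentence scores into one document-level estimate."""
    return mean(scores) if scores else 0.0


def toy_scorer(segment):
    """Hypothetical stand-in for a classifier: share of sentences tagged '[ai]'."""
    return sum(s.startswith("[ai]") for s in segment) / len(segment)
```

With eight sentences, the last four tagged `[ai]`, and `size=4, stride=2`, the sentences in the middle of the document sit in two windows and receive intermediate scores, which is the effect the overlap is meant to capture.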

Currently, Turnitin’s AI writing detection model is trained to detect content from the GPT-3 and GPT-3.5 language models, which includes ChatGPT. Because the writing characteristics of GPT-4 are consistent with earlier model versions, our detector is able to detect content from GPT-4 (ChatGPT Plus) most of the time. We are actively working on expanding our model to enable us to better detect content from other AI language models.

3. What parameters or flags does Turnitin’s model take into account when detecting AI writing?

GPT-3 and ChatGPT are trained on the text of the entire internet, and they are essentially taking that large amount of text and generating sequences of words based on picking the next highly probable words. This means that GPT-3 and ChatGPT tend to generate the next word in a sequence of words in a consistent and highly probable fashion. Human writing, on the other hand, tends to be inconsistent and idiosyncratic, resulting in a low probability of picking the next word the human will use in the sequence.

Our classifiers are trained to detect these differences in word probability and are attuned to the particular word probability sequences of human writers.
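One common way to put a number on "how probable was each next word" is the average surprisal (negative log probability) per token. The sketch below only illustrates that idea: the probabilities are invented for the example, the function name is hypothetical, and nothing here is claimed to be the feature set Turnitin's classifier uses.

```python
import math


def mean_surprisal(token_probs):
    """Average negative log2 probability per token, in bits.

    Lower values mean each next word was easy to predict;
    higher values mean the word choices were less expected.
    """
    return -sum(math.log2(p) for p in token_probs) / len(token_probs)


# Invented next-word probabilities, for illustration only.
predictable_text = [0.5, 0.5, 0.25, 0.5]           # consistently likely words
idiosyncratic_text = [0.125, 0.0625, 0.25, 0.03125]  # less expected words

low = mean_surprisal(predictable_text)      # 1.25 bits per token
high = mean_surprisal(idiosyncratic_text)   # 3.5 bits per token
```

In this toy example the consistently probable sequence averages 1.25 bits per token and the idiosyncratic one 3.5 bits, which mirrors the contrast described above between generated and human text.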

4. How was Turnitin’s model trained?

Our model is trained on a representative sample of data, spread over a period of time, that includes both AI-generated and authentic academic writing across geographies and subject areas. While creating our sample dataset, we also took into account statistically under-represented groups like second-language learners, English users from non-English speaking countries, students at colleges and universities with diverse enrollments, and less common subject areas such as anthropology, geology, sociology, and others to minimize bias when training our model.

5. Can I check past submitted assignments for AI writing?

Yes. Previously submitted assignments can be checked for AI writing detection if they’re re-submitted to Turnitin. Only assignments that are submitted after the launch of our capability (4th April 2023) are automatically checked for AI writing detection.

6. What languages are supported?

English. For the first iteration of Turnitin’s AI writing detection capabilities, we are able to detect AI writing for documents submitted in long-form English only.

7. What will happen if a non-English paper is submitted?

If a non-English paper is submitted, the detector will not process the submission. The indicator will show an empty/error state with ‘in-app’ guidance that will tell users that this capability only works for English submissions at this time. No report will be generated if the submitted content is not in English.

8. Can my institution get access to AI detection to be able to trial this new capability?

Yes, admins can set up test accounts and allow instructors to use and assess the feature. If you’re an existing Turnitin Feedback Studio (TFS) customer, your admin will be able to create a sub-account and enable AI writing for only that account for testing purposes.

If you’re an Originality, Similarity or Simcheck customer, you can request test accounts by contacting your account manager or CSM.

New customers should speak to a Turnitin representative about getting a test account.

9. Can I or my admin suppress the new indicator and report if we do not want to see it?

Yes, admins have the option to enable/disable the AI writing feature from their admin settings page. Disabling the feature will remove the AI writing indicator & report from the Similarity report and it won’t be visible to instructors and admins until they enable it again.

10. Will the addition of Turnitin’s AI detection functionality to the Similarity report change my workflow or the way I use the Similarity report?

No. This additional functionality does not change the way you use the Similarity report or your existing workflows. Our AI detection capabilities have been added to the Similarity report to provide a seamless experience for our customers.

11. Will the AI detection capabilities be available via LMSs such as Moodle, Blackboard, Canvas, etc?

Yes, users will be able to see the indicator and the report via the LMS they’re using. We have made AI writing detection available via the Similarity report. There is no AI writing indicator or score embedded directly in the LMS user interface and users will need to go into the report to see the AI score.

12. Does the MS Teams integration support the AI writing detection feature?

AI writing detection is only available to instructors using the new Turnitin Feedback Studio integration. Since the MS Teams Assignment Similarity integration does not offer an instructor view due to Turnitin not receiving user metadata, AI writing detection is unavailable.

If an instructor using the Similarity integration has a concern that a submission may have been written with an AI writing tool, they can request that their administrator use the paper lookup tool to view a full report.

13. How is authorship detection within Originality different from AI writing detection?

Turnitin’s AI writing detection technology is different from the technology used within Authorship (Originality). Our AI writing detection model calculates the overall percentage of text in the submitted document that was likely generated by an AI writing tool. Authorship, on the other hand, uses metadata as well as forensic language analysis to detect if the submitted assignment was written by someone other than the student. It will not be able to indicate if it was AI written; only that the content is not the student’s own work.

AI detection results & interpretation

1. What does the percentage in the AI writing detection indicator mean?

The percentage indicates the amount of qualifying text within the submission that Turnitin’s AI writing detection model determines was generated by AI. This qualifying text includes only prose sentences, meaning that we only analyze blocks of text that are written in standard grammatical sentences and do not include other types of writing such as lists, bullet points, or other non-sentence structures.

This percentage is not necessarily the percentage of the entire submission. If text within the submission is not considered long-form prose text, it will not be included.

2. What is the accuracy of Turnitin’s AI writing indicator?

We strive to maximize the effectiveness of our detector while keeping our false positive rate - incorrectly identifying fully human-written text as AI-generated - under 1% for documents with over 20% of AI writing. In other words, we might incorrectly flag about one out of every 100 fully human-written documents as AI-written.

To bolster our testing framework and diagnose statistical trends of false positives, in April 2023 we performed additional tests on 800,000 academic papers that were written before the release of ChatGPT to further validate our less than 1% false positive rate.

In order to maintain this low rate of 1% for false positives, there is a chance that we might miss 15% of AI written text in a document. We’re comfortable with that since we do not want to incorrectly highlight human-written text as AI-written. For example, if we identify that 50% of a document is likely written by an AI tool, it could contain as much as 65% AI writing.
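The arithmetic behind these two figures can be sketched as below. The function names and the 1,000-document batch are hypothetical, and the additive reading of the 15% figure follows the 50% to 65% example given above; this is an illustration of the stated rates, not a report of test results.

```python
def expected_false_positives(human_documents, false_positive_rate=0.01):
    """Upper-bound count of fully human-written documents flagged by mistake."""
    return human_documents * false_positive_rate


def possible_ai_range(reported_pct, max_missed_points=15):
    """Range the true AI share could fall in, given the reported percentage.

    Assumption: up to `max_missed_points` percentage points of AI writing
    may go undetected, and the total cannot exceed 100%.
    """
    return reported_pct, min(100, reported_pct + max_missed_points)


flagged = expected_false_positives(1_000)  # about 10 documents at a 1% rate
low, high = possible_ai_range(50)          # (50, 65)
```

Read this way, a reported 50% is better treated as "at least about 50%, possibly up to 65%" than as an exact measurement.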

We’re committed to safeguarding the interests of students while helping institutions maintain high standards of academic integrity. We will continue to adapt and optimize our model based on our learnings from real-world document submissions, and as large language models evolve to ensure we maintain this less than 1% false positive rate.

3. How does Turnitin ensure that the false positive rate for a document remains less than 1%?

Since the launch of our solution in April, we tested 800,000 academic papers that were written before the release of ChatGPT. Based on the results of these tests, we made the following updates to our model in May to ensure we hold steadfast on our objective of keeping our false positive rate below 1% for a document.

- Added an additional indicator for documents with less than 20% AI writing detected

We learned that our AI writing detection scores under 20% have a higher incidence of false positives. This is inconsistent behavior, and we will continue to test to understand the root cause. In order to reduce the likelihood of misinterpretation, we have updated the AI indicator button in the Similarity Report to contain an asterisk for percentages less than 20% to call attention to the fact that the score is less reliable.

- Increased the minimum word count from 150 to 300 words

Based on our data and testing, we increased the minimum word requirement from 150 to 300 words for a document to be evaluated by our AI writing detector. Results show that our accuracy increases with just a little more text, and our goal is to focus on long-form writing. We may adjust this minimum word requirement over time based on the continuous evaluation of our model.

- Changed how we aggregate sentences in the beginning and at the end of a submission

We observed a higher incidence of false positives in the first few or last few sentences of a document. Usually, this is the introduction and conclusion in a document. As a result, we changed how we aggregate these specific sentences for detection to reduce false positives.

4. The percentage shown sometimes doesn’t match the amount of text highlighted. Why is that?

Unlike our Similarity Report, the AI writing percentage does not necessarily correlate to the amount of text in the submission. Turnitin’s AI writing detection model only looks for prose sentences contained in long-form writing. Prose text contained in long-form writing means individual sentences contained in paragraphs that make up a longer piece of written work, such as an essay, a dissertation, or an article, etc. The model does not reliably detect AI-generated text in the form of non-prose, such as poetry, scripts, or code, nor does it detect short-form/unconventional writing such as bullet points or annotated bibliographies.

This means that a document containing several different writing types would result in a disparity between the percentage and the highlights.
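A small worked example may make the disparity concrete. In the sketch below, each unit of a document is described by its word count, whether it counts as qualifying prose, and whether it was flagged; weighting by word count and all of the names are assumptions made for illustration.

```python
def ai_percentages(units):
    """Compare the indicator percentage with the flagged share of the whole file.

    units: list of (word_count, is_prose, flagged_as_ai) tuples.
    Returns (percentage of qualifying prose flagged,
             percentage of the entire document flagged).
    """
    total_words = sum(words for words, _, _ in units)
    qualifying = sum(words for words, prose, _ in units if prose)
    flagged = sum(words for words, prose, ai in units if prose and ai)
    indicator = 100 * flagged / qualifying if qualifying else None
    share_of_document = 100 * flagged / total_words if total_words else None
    return indicator, share_of_document


# Hypothetical submission: 600 words of prose plus 600 words of bullet points.
submission = [
    (300, True, True),    # prose paragraph flagged as AI-written
    (300, True, False),   # prose paragraph not flagged
    (600, False, False),  # bullet list, not analyzed
]
indicator, share = ai_percentages(submission)  # (50.0, 25.0)
```

Here the indicator would read 50% because half of the qualifying prose is flagged, while the highlights cover only a quarter of the full document.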

5. What do the different indicators mean?

Upon opening the Similarity Report, after a short period of processing, the AI writing detection indicator will show one of the following:

- Blue with a percentage between 0 and 100: The submission has processed successfully. The displayed percentage indicates the amount of qualifying text within the submission that Turnitin’s AI writing detection model determines was generated by AI.

As noted previously, this percentage is not necessarily the percentage of the entire submission. If text within the submission was not considered long-form prose text, it will not be included.

Our testing has found that there is a higher incidence of false positives when the percentage is less than 20. In order to reduce the likelihood of misinterpretation, the AI indicator will display an asterisk for percentages less than 20 to call attention to the fact that the score is less reliable.

To explore the results of the AI writing detection capabilities, select the indicator to open the AI writing report. The AI writing report opens in a new tab of the window used to launch the Similarity Report. If you have a pop-up blocker installed, ensure it allows Turnitin pop-ups.

- Gray with no percentage displayed (- -): The AI writing detection indicator is unable to process this submission. This can be due to one, or several, of the following reasons:

- The submission was made before the release of Turnitin’s AI writing detection capabilities. The only way to see the AI writing detection indicator/report on historical submissions is to resubmit them.

- The submission does not meet the file requirements needed to successfully process it for AI writing detection. In order for a submission to generate an AI writing report and percentage, the submission needs to meet the following requirements:

- File size must be less than 100 MB

- File must have at least 300 words of prose text in a long-form writing format

- Files must not exceed 15,000 words

- File must be written in English

- Accepted file types: .docx, .pdf, .txt, .rtf

- Error ( ! ): This error means that Turnitin has failed to process the submission. Turnitin is constantly working to improve its service, but unfortunately, events like this can occur. Please try again later. If the file meets all the file requirements stated above, and this error state still shows, please get in touch through our support center so we can investigate for you.
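The file requirements and indicator states listed above can be summarized in a short sketch. Everything named here is hypothetical (the function names, the `"en"` language code, reading 100 MB as 100 × 1024 × 1024 bytes), and applying the asterisk to every value below 20, including 0, is a literal reading of the text rather than confirmed behavior.

```python
from pathlib import Path

MAX_FILE_BYTES = 100 * 1024 * 1024  # assumption: "100 MB" read as 100 MiB
MIN_PROSE_WORDS = 300
MAX_WORDS = 15_000
ACCEPTED_TYPES = {".docx", ".pdf", ".txt", ".rtf"}
LOW_CONFIDENCE_BELOW = 20  # percentages under this get an asterisk


def unmet_requirements(filename, size_bytes, total_words, prose_words, language):
    """List the stated requirements that a submission fails to meet."""
    problems = []
    if Path(filename).suffix.lower() not in ACCEPTED_TYPES:
        problems.append("unsupported file type")
    if size_bytes >= MAX_FILE_BYTES:
        problems.append("file is 100 MB or larger")
    if prose_words < MIN_PROSE_WORDS:
        problems.append("fewer than 300 words of prose")
    if total_words > MAX_WORDS:
        problems.append("more than 15,000 words")
    if language != "en":
        problems.append("not written in English")
    return problems


def indicator_label(ai_percentage, problems):
    """Return the text the indicator would show for a processed submission."""
    if problems:
        return "--"  # gray state: the submission could not be processed
    mark = "*" if ai_percentage < LOW_CONFIDENCE_BELOW else ""
    return f"{ai_percentage}%{mark}"
```

For example, a 1,200-word English `.docx` essay that scores 12 would be labeled `12%*`, while a 100-word French `.odt` file would fail three checks and show the gray `--` state.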

6. What can I do if I feel that the AI writing detection indicator is incorrect? How does Turnitin’s indicator address false positives?

If you find AI written documents that we've missed, or notice authentic student work that we've predicted as AI-generated, please let us know! Your feedback is crucial in enabling us to improve our technology further. You can provide feedback via the ‘feedback’ button found in the AI writing report.

False positives (human-written text incorrectly flagged as AI-generated) sometimes involve lists without much structural variation, text that literally repeats itself, or text that has been paraphrased without developing new ideas. If our indicator shows a higher amount of AI writing in such text, we advise you to take that into consideration when looking at the percentage indicated.

In a longer document with a mix of authentic writing and AI generated text, it can be difficult to exactly determine where the AI writing begins and original writing ends, but our model should give you a reliable guide to start conversations with the submitting student.

In shorter documents where there are only a few hundred words, the prediction will be mostly "all or nothing" because we're predicting on a single segment without the opportunity to overlap. This means that some text that is a mix of AI-generated and original content could be flagged as entirely AI-generated.

Please consider these points as you are reviewing the data and following up with students or others.

7. Will students be able to see the results?

The AI writing detection indicator and report are not visible to students.

8. Does the AI Indicator automatically feed a student’s paper into a repository?

No, it does not. There is no separate repository for AI writing detection. Our AI writing detection capabilities are part of our existing similarity report workflow. When we receive submissions, they are compared and evaluated via our proprietary algorithms for both similarity text matching and the likelihood of being AI writing (generated by LLMs). Customers retain the ability to choose whether to add their student papers into the repository or not.

When AI writing detection is run on a submission, the results are shared on the similarity report unless suppressed, and the results (the percentage of AI writing identified by the detector, along with the segments identified as highly likely to have been written by AI) are retained as part of the similarity report.

9. What is the difference between the Similarity score and the AI writing detection percentage? Are the two completely separate or do they influence each other?

The Similarity score and the AI writing detection percentage are completely independent and do not influence each other. The Similarity score indicates the percentage of matching-text found in the submitted document when compared to Turnitin’s comprehensive collection of content for similarity checking.

The AI writing detection percentage, on the other hand, shows the overall percentage of text in a submission that Turnitin’s AI writing detection model predicts was generated by AI writing tools.

10. Why do I see the AI writing score and the corresponding report on the similarity report prior to April 4?

Our AI writing detection capabilities are part of our existing similarity report workflow to detect unoriginal writing. While we released AI writing detection capabilities on April 4, 2023, prior to launch, we were preparing for the release and running our AI writing detector on a sampling of papers as part of our QA testing. This allowed us to confirm our readiness for release on April 4. As a result, you may see the AI writing score along with the corresponding report on some similarity reports submitted between March 8, 2023 and April 4, 2023.

11. Does the Turnitin model take into account that AI writing detection technology might be biased against particular subject-areas or second-language writers?

Yes, it does. One of the guiding principles of our company and of our AI team has been to minimize the risk of harm to students, especially those disadvantaged or disenfranchised by the history and structure of our society. Hence, while creating our sample dataset, we took into account statistically under-represented groups like second-language learners, English users from non-English speaking countries, students at colleges and universities with diverse enrollments and less common subject areas such as anthropology, geology, sociology, and others.

12. How can I use the AI writing detection indicator percentage in the classroom with students?

Turnitin’s AI writing detection indicator shows the percentage of text that has likely been generated by an AI writing tool, while the report highlights the exact segments that seem to be AI-written. The final decision on whether any misconduct has occurred rests with the reviewer/instructor. Turnitin does not make a determination of misconduct; rather, it provides data for educators to make an informed decision based on their academic and institutional policies.

13. Can I download the AI report like the Similarity report?

Yes. The AI detection report can be downloaded as a PDF via the ‘download’ button located in the right-hand corner of the report.

Scope of detection

1. Which AI writing models can Turnitin’s technology detect?

The first iteration of Turnitin’s AI writing detection capabilities has been trained to detect models including GPT-3, GPT-3.5, and variants. Our technology can also detect other AI writing tools that are based on these models, such as ChatGPT. We’ve completed our testing of GPT-4 (ChatGPT Plus), and the result is that our solution will detect text generated by GPT-4 most of the time. We plan to expand our detection capabilities to other models in the future.

2. Which model is Turnitin’s AI detection model based on?

Our model is based on an open-source foundation model from Hugging Face. We undertook multiple rounds of carefully calibrated retraining, evaluation and fine-tuning. We must emphasize that the unique power of our model arises from the carefully curated data we've used to train it, leveraging our 20+ years of expertise in authentic student writing, along with the technology we developed to extract the maximum predictive power from the model trained on that data. In training our model, we focused on minimizing false positives while maximizing accuracy for the latest generation of LLMs, ensuring that we help educators uphold academic integrity while protecting the interests of students.

3. Is your current model able to detect GPT-4 generated text?

Yes, it is, most of the time. Our AI team has conducted tests on GPT-4 using our released detector to compare its performance and understand the differences between GPT-3.5 (on which our model is trained) and GPT-4. The result is that our detector will detect text generated by GPT-4 most of the time, but we don’t have further, consistent guidance to share at this time. The free version of ChatGPT is still operating on GPT-3.5, while the paid version, ChatGPT Plus, is operating on GPT-4.

4. How will Turnitin be future-proofing for advanced versions of GPT and other large language models yet to emerge?

We recognize that Large Language Models (LLMs) are rapidly expanding and evolving, and we are already hard at work building detection systems for additional LLMs. Our focus initially has been on building and releasing an effective and reliable AI writing detector for GPT-3 and GPT-3.5, and other writing tools based on these models such as ChatGPT. Recently, we conducted tests on GPT-4, the model on which ChatGPT Plus is based, and found that our detection capabilities detected AI-generated text in most cases.

5. Will the AI percentage change over time as the detector and the models it is detecting evolve?

Yes, as we iterate and develop our model further, it is likely that our detection capabilities will also change, affecting the AI percentage. However, for a submitted document, the AI percentage will change only if it is re-submitted for processing.

6. Can Turnitin detect if text generated by an AI writing tool (ChatGPT, etc.) is further paraphrased using a paraphrasing tool? Will it flag the content as AI-generated even in this instance?

Our detector is trained on the outputs of GPT-3, GPT-3.5 and ChatGPT, and modifying text generated by these systems will have an impact on our detectors’ abilities to identify AI written text. In our AI Innovation Lab, we have conducted tests using open sourced paraphrasing tools (including different LLMs) and in most cases, our detector has retained its effectiveness and is able to identify text as AI-generated even when a paraphrasing tool has been used to change the AI output.

7. Does Turnitin have plans to build a solution to detect when students paraphrase content either themselves or through tools such as Quillbot?

Turnitin has been working on building paraphrase detection capabilities – ability to detect when students have paraphrased content either with the help of paraphrasing tools or re-written it themselves – for some time now, and the technology is already producing the desired results in our AI Innovation Lab. In the instance when the student is using a word spinner or an online paraphrasing tool, the student is just running content through a word spinner which uses AI to intentionally subvert similarity detection, not using generative AI tools such as ChatGPT to create content.

We have plans for a beta release in 2023, and we will be making paraphrase detection available to instructors at institutions that are using TFS with Originality and Originality for an additional cost. It will be released first in our TFS with Originality product.

8. If students use Grammarly for grammar checks, does Turnitin detect it and flag it as AI?

No. Our detector is not tuned to target Grammarly-generated spelling, grammar, and punctuation modifications to content but rather, other AI content written by LLMs such as GPT-3.5. Based on initial tests we conducted on human-written documents with no AI-generated content in them, in most cases, changes made by Grammarly (free & premium) and/or other grammar-checking tools were not flagged as AI-written by our detector. Please note that this excludes GrammarlyGO, which is a generative AI writing tool; as such, content produced using this tool will likely be flagged as AI-generated by our detector.

Access & licensing

1. Who will get access to this solution? Will we need to pay more for this capability?

The first iteration of our AI writing detection indicator and report are available to our academic writing integrity customers as part of their existing licenses, so that they’re able to test the solution and see how it works. This includes customers with a license for Turnitin Feedback Studio (TFS), TFS with Originality, Turnitin Originality, Turnitin Similarity, Simcheck, Originality Check, and Originality Check+. It is available for customers using these platforms via an integration with an LMS or with Turnitin’s Core API. Please note, only instructors and administrators will be able to see the indicator and report.

Beginning January 1, 2024, only customers licensing Originality or TFS with Originality will have access to the full AI writing detection experience.

2. When can customers get access to this solution?

Turnitin’s AI writing detection capabilities are available now and have been added to the Similarity Report. Customers licensing any of the above Turnitin products should be able to see the indicator and access the AI report.

3. Is Turnitin’s AI writing detection a standalone solution or is it part of another product?

The first iteration of Turnitin’s AI writing detection capabilities is a separate feature of the Similarity Report and is available across these products: Turnitin Feedback Studio (TFS), TFS with Originality, Turnitin Originality, Turnitin Similarity, Simcheck, Originality Check, and Originality Check+. The indicator links to a report which shows the exact segments that are predicted as AI-written within the submitted content.

4. Why is AI detection not being added to other Turnitin products like Gradescope and iThenticate?

We focused our resources on what we view as the biggest, most acute problem: long-form writing in higher education and K12. We are currently investigating how we can bring AI writing detection to iThenticate customers. We do not currently have plans to add these capabilities to Gradescope, since the primary use case for Gradescope is handwritten text while for AI detection we’re focusing on typed text. However, we are happy to learn more about customer needs for AI writing detection within this product. In addition, we are not pursuing ChatGPT code detection at this time.

5. Where can I find more information about this new solution?

You can find information about Turnitin’s AI writing detection capabilities on this page.

6. I’m offended that Turnitin is making the AI writing detection free for instructors then charging for it later. It feels like Turnitin is advertising to faculty.

We made the decision to provide free access to our detection capabilities during this preview phase to support educators during this unprecedented time of rapid change. We received a significant amount of positive feedback from customers, and we acted on that feedback.

Our goal has always been to work closely with our customers to create an optimal solution for educators. We need as many educators as possible to use our AI writing detection feature quickly to gather feedback and address any gaps.

We understand that you may be apprehensive about instructors using a tool or feature that the institution may not wish to purchase in the future. However, we have invested heavily in developing and improving our AI writing detection technology over the past two years. We believe that this technology provides significant value to our customers by providing data and insights on when AI-generated content is submitted by students. This enables educators to uphold academic integrity while advancing students' learning. Nonetheless, maintaining and improving our technology requires ongoing investment as AI writing tools evolve and improve at a rapid pace over time.

The decision to move to a paid licensing structure beginning January 2024 was made to ensure that we can continue to provide high-quality AI writing detection features to our customers. This enables us to invest in further research and development and improve our infrastructure to meet the evolving needs of our customers.

7. If I opt-out of AI detection, does it mean that my students’ submissions will not be assessed by the detection tool and data retained by Turnitin?

Customers come to Turnitin for services that detect unoriginal writing, which, with the development of AI writing, now includes unoriginal writing by both humans and non-humans (LLMs). Our AI writing detection capabilities are part of our existing similarity report workflow. When we receive submissions, they are compared and evaluated via our proprietary algorithms for both similarity text matching and the likelihood of being AI writing (generated by LLMs). As such, suppressing the appearance of the AI writing indicator does not stop the assessment for AI writing. When AI writing detection is run on a submission, the result is shared on the similarity report, unless suppressed. Suppressing AI writing detection simply hides the indicator showing the predicted percentage of AI writing; the indicator will not be displayed on the similarity report, and the linked AI writing report showing the segments identified as written by AI will not be shown either. However, the results are retained as part of the similarity report. Therefore, when the feature is re-enabled, the AI writing score will appear on the similarity report.

This process is separate and apart from your designation of whether or not submissions can be stored in the ‘repository.’